How to build an AI chatbot with no code - Flowise AI

I've been developing iterative versions of an interactive chatflow that draws from the essays, videos, and newsletter issues I've created using AI. You can chat with it directly on this website by clicking the dedicated widget in the bottom right corner.

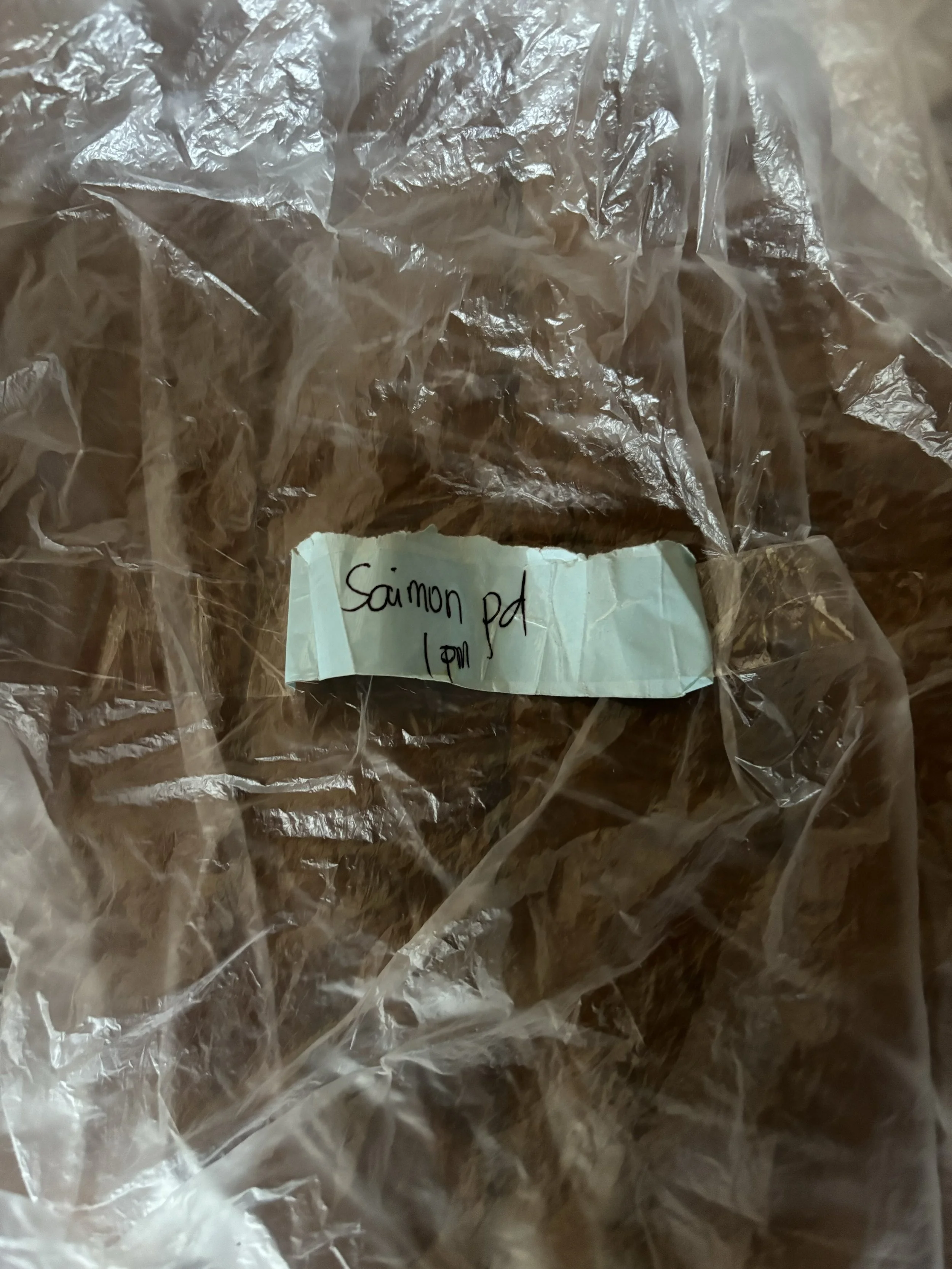

I named the chatbot "Saimon" because it contains "AI" in its spelling and sounds similar to my name (Simon). The idea came from an amusing experience at a laundry shop in the Philippines. When I went to collect my clothes, I noticed the plastic bag had my name spelled as "Saimon." I chuckled, realizing it would be a perfect name for the chatbot I was developing.

The (empty) laundry bag with the name spelling that sparked the idea - “Saimon pd (paid) - 1pm (the time of collection the next day)”

I decided to build an AI chatbot out of interest in the AI trend, so that I could experience first-hand what developing AI-based tools looks like, starting from an accessible use case that was not too far beyond my current skills.

I was also developing an AI chatbot for one of my partners as part of an app development project, which made it a great time to experiment with the new technology, understand Artificial Intelligence at a deeper level, and incorporate it into my services and products.

On the functional side, building a chatbot that could interact with other people using my writings and video transcripts seemed like an interesting idea to me.

I also acknowledge that this is only one of many approaches to building an AI chatbot or application and that it may not be the most efficient or effective. With that in mind, here are the key steps I followed:

1. Create an API automation to save content from my website, YouTube, and newsletters into a Google Sheet
2. Send the content from Google Sheets into a vector database (Supabase)
3. Create a SQL query to retrieve the content based on the Embeddings similarity score
4. Build a chatflow in Flowise to retrieve the content based on Embeddings and reply to the user accordingly
5. Monitor the chatflow analytics and performance using Langfuse
6. Deploy the chatflow on my website

Create an API automation to save content from my website, YouTube, and my newsletters into a Google Sheet

The first step in the process is saving my published content to a Google Sheet for easy retrieval and manipulation via API. I created three automations in Make to accomplish this. The video above demonstrates one of these automations, which saves videos from my YouTube channel to the Google Sheet.

The other two automations save content from my website and newsletter. For the website, I use the RSS feed as a trigger to pull newly published essays. For the newsletter, I use the Gmail module as a trigger, with filters to select only emails from a specific address used exclusively for sending my newsletter. While the triggers differ, the subsequent automation steps explained in the video apply to all three automations.
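For readers who want to see what the RSS-triggered step amounts to, here is a minimal Python sketch of turning feed items into spreadsheet-style rows. It is only an illustration of the idea, not the Make scenario itself: the sample feed, the `rss_to_rows` helper, and the column names are all assumptions made for this example.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample feed; a real feed would be fetched from the site's RSS URL.
SAMPLE_RSS = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example blog</title>
    <item>
      <title>First essay</title>
      <link>https://example.com/first-essay</link>
      <pubDate>Mon, 01 Jan 2024 10:00:00 GMT</pubDate>
      <description>Summary of the first essay.</description>
    </item>
    <item>
      <title>Second essay</title>
      <link>https://example.com/second-essay</link>
      <pubDate>Tue, 02 Jan 2024 10:00:00 GMT</pubDate>
      <description>Summary of the second essay.</description>
    </item>
  </channel>
</rss>"""


def rss_to_rows(rss_xml: str, source: str = "website") -> list[dict]:
    """Turn each <item> of an RSS 2.0 feed into one spreadsheet-style row."""
    root = ET.fromstring(rss_xml)
    rows = []
    for item in root.iter("item"):
        rows.append({
            "source": source,  # assumed column: where the content came from
            "title": item.findtext("title", default="").strip(),
            "url": item.findtext("link", default="").strip(),
            "published": item.findtext("pubDate", default="").strip(),
            "content": item.findtext("description", default="").strip(),
        })
    return rows


rows = rss_to_rows(SAMPLE_RSS)
for row in rows:
    print(row["published"], "|", row["title"], "|", row["url"])
```

Each dictionary corresponds to one row that an automation could append to the sheet; the YouTube and Gmail triggers would produce rows of the same shape from different inputs.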

Create an API automation to send the content from Google Sheets into a vector database (Supabase)

The second stage involves a weekly automation that transfers content from Google Sheets to a Supabase table named "documents." The video above provides a detailed explanation of this automated workflow.

The Supabase table includes an "embedding" vector type column, which is crucial for the chatbot's functionality. This column is used in the SQL query to retrieve relevant data during user interactions.

The automation populates the "embedding" column: an OpenAI Embeddings API call converts each piece of content into a vector using the text-embedding-3-small model.
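As a rough illustration of that embedding step, the Python sketch below builds the HTTP request for the OpenAI Embeddings endpoint and shapes the response into a row matching the "documents" table. The helper names, the placeholder API key, and the shortened fake response are assumptions for this example; the actual workflow runs in Make, not in Python.

```python
import json
import urllib.request

OPENAI_EMBEDDINGS_URL = "https://api.openai.com/v1/embeddings"
EMBEDDING_MODEL = "text-embedding-3-small"  # model named in the article


def build_embedding_request(text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request asking for one embedding."""
    payload = {"model": EMBEDDING_MODEL, "input": text}
    return urllib.request.Request(
        OPENAI_EMBEDDINGS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def to_document_row(doc_id: str, content: str, metadata: dict, api_response: dict) -> dict:
    """Combine the content with its embedding into a row for the documents table."""
    embedding = api_response["data"][0]["embedding"]
    return {"id": doc_id, "content": content, "metadata": metadata, "embedding": embedding}


req = build_embedding_request("Hello world", api_key="sk-placeholder")
# To actually send it:
#   with urllib.request.urlopen(req) as resp:
#       api_response = json.load(resp)

# Shortened stand-in for a real response; real vectors from this model have 1536 values.
fake_response = {"data": [{"embedding": [0.1, 0.2, 0.3]}]}
row = to_document_row("essay-1", "Hello world", {"source": "website"}, fake_response)
print(sorted(row))
```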

Create a SQL query to retrieve the content based on the Embeddings similarity score

In Supabase, I created an SQL function that works well with Flowise, following this guide. You can find the full function here and below. For the function to work, you will need these three columns:

embedding (vector(1536))

metadata (jsonb)

content (text)

    -- Enable the pgvector extension to work with embedding vectors
    create extension vector;

    -- Create a table to store your documents
    create table documents (
      id text primary key,
      content text,
      metadata jsonb,
      embedding vector(1536)
    );

    -- Create a function to search for documents
    create function match_documents (
      query_embedding vector(1536),
      match_count int DEFAULT null,
      filter jsonb DEFAULT '{}'
    ) returns table (
      id text,
      content text,
      metadata jsonb,
      similarity float
    )
    language plpgsql
    as $$
    #variable_conflict use_column
    begin
      return query
      select
        id,
        content,
        metadata,
        1 - (documents.embedding <=> query_embedding) as similarity
      from documents
      where metadata @> filter
      order by documents.embedding <=> query_embedding
      limit match_count;
    end;
    $$;
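To make the ranking logic of the function easier to follow, here is a small pure-Python sketch of the same idea. In pgvector, `<=>` is the cosine distance operator, so `1 - distance` gives the cosine similarity. The toy 3-dimensional vectors, the sample rows, and the `match_documents_py` name are assumptions for illustration, and the metadata filter is simplified to flat top-level keys, whereas the `@>` operator also handles nested JSON.

```python
import math


def cosine_distance(a, b):
    """Cosine distance between two vectors, as computed by pgvector's <=> operator."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1 - dot / (norm_a * norm_b)


def match_documents_py(documents, query_embedding, match_count=None, metadata_filter=None):
    """Filter by metadata, score by cosine similarity, return the best matches first."""
    metadata_filter = metadata_filter or {}
    candidates = [
        d for d in documents
        if all(d["metadata"].get(k) == v for k, v in metadata_filter.items())
    ]
    scored = [
        {**d, "similarity": 1 - cosine_distance(d["embedding"], query_embedding)}
        for d in candidates
    ]
    # Smallest distance first is the same as largest similarity first.
    scored.sort(key=lambda d: d["similarity"], reverse=True)
    # match_count=None mirrors "limit null" in SQL: no limit at all.
    return scored if match_count is None else scored[:match_count]


# Toy rows with 3-dimensional vectors; the real column holds 1536 values.
docs = [
    {"id": "a", "content": "Essay A", "metadata": {"source": "website"}, "embedding": [1.0, 0.0, 0.0]},
    {"id": "b", "content": "Video B", "metadata": {"source": "youtube"}, "embedding": [0.0, 1.0, 0.0]},
    {"id": "c", "content": "Essay C", "metadata": {"source": "website"}, "embedding": [1.0, 1.0, 0.0]},
]
query = [1.0, 0.0, 0.0]

top_two = match_documents_py(docs, query, match_count=2)
only_youtube = match_documents_py(docs, query, metadata_filter={"source": "youtube"})
print([d["id"] for d in top_two], [d["id"] for d in only_youtube])
```

The document pointing in the same direction as the query ranks first, and the metadata filter narrows the candidates before any scoring happens, which is the order of operations in the SQL version as well.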

Build a chatflow in Flowise to retrieve the content based on Embeddings and reply to the user accordingly

Next, I created the chatflow in Flowise. The video above explains all the details. Here are the logic and nodes included in the chatflow:

OpenAI Embeddings: This node generates an embedding from the user's message, allowing us to pass it to our SQL query and retrieve relevant content. I used the text-embedding-3-small model to match the 1536 size of my Supabase embedding vector column.

Supabase: This node uses the SQL function from the previous section to retrieve the most relevant content based on the user's query.

ChatOpenAI: Using the gpt-4o model, this node provides LLM capabilities to answer the user's query effectively.

Conversational retrieval QA chain: This final node outputs the answer to the user, based on gpt-4o's general knowledge and the Supabase vector store retriever. I customized the prompt of this node to tailor it to my desired chatbot type.
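Conceptually, the last two nodes combine the retrieved content with the user's question before the model answers. The Python sketch below shows that idea only; it is not Flowise's internal prompt or code, and the `build_prompt` helper, the template wording, and the sample documents are all assumptions for illustration.

```python
def build_prompt(question: str, retrieved_docs: list[dict], system_instructions: str) -> str:
    """Assemble one prompt from custom instructions, retrieved content, and the question."""
    context = "\n\n".join(
        f"[{i}] {doc['content']}" for i, doc in enumerate(retrieved_docs, start=1)
    )
    return (
        f"{system_instructions}\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Hypothetical retrieval results, ordered from most to least similar.
retrieved = [
    {"content": "Essay about building habits."},
    {"content": "Newsletter issue about automation."},
]
prompt = build_prompt(
    question="What have you written about habits?",
    retrieved_docs=retrieved,
    system_instructions="Answer using the context below. Say so if the context is not enough.",
)
print(prompt)
```

Customizing the QA chain prompt, as described above, corresponds to changing the instructions part of such a template while the retrieved context and the question are filled in on every message.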

After testing locally, I deployed the chatflow on a server using Render for production use. Since then, I've been continuously iterating on the QA chain prompt to enhance its accuracy and effectiveness.
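Once a chatflow is deployed, it can also be queried over HTTP through Flowise's prediction endpoint. The Python sketch below builds such a request; the base URL, chatflow ID, and API key are placeholders, and the helper name is an assumption for this example. The JSON response is expected to carry the answer in a text field, but check the response of your own instance rather than relying on that.

```python
import json
import urllib.request


def build_prediction_request(base_url, chatflow_id, question, api_key=None):
    """Build (but do not send) a POST request to a Flowise chatflow's prediction endpoint."""
    url = f"{base_url.rstrip('/')}/api/v1/prediction/{chatflow_id}"
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Only needed when the chatflow is protected with an API key.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url,
        data=json.dumps({"question": question}).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Placeholder values; replace with your own deployment URL and chatflow ID.
open_req = build_prediction_request(
    "https://flowise.example.com/", "your-chatflow-id", "What is Saimon?"
)
protected_req = build_prediction_request(
    "https://flowise.example.com", "your-chatflow-id", "What is Saimon?", api_key="placeholder-key"
)
# To actually send it:
#   with urllib.request.urlopen(open_req) as resp:
#       answer = json.load(resp)
print(open_req.full_url)
```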

Track chatflow analytics/functioning using Langfuse

In the video above, I demonstrate how I use Langfuse to analyze and enhance the AI chatbot built with Flowise. Langfuse is an open-source tool with a free tier that integrates directly with my Flowise chatbot and tracks all interactions and underlying functions within each exchange. It offers detailed traces of every conversation, including user inputs, chatbot responses, and backend processes. I find it useful for troubleshooting and improving the chatbot's accuracy. Additionally, it provides insight into the cost of each interaction and the OpenAI API calls, and it has a handy tool to keep track of multiple prompt versions.

To set up Langfuse:

In Flowise, navigate to chatflow settings, select "Configuration," then "Analyze Chatflow"

Select Langfuse and input your credentials (API keys and endpoint)

Enable the connection and save the chatflow

I've also created an automation to transfer Langfuse data to Notion, allowing me to easily access the key metrics I want to monitor from Langfuse.
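The first half of such an automation is reading traces out of Langfuse. As a hedged sketch, the Python below builds a request to the Langfuse public API, which authenticates with HTTP Basic auth using the public key as the username and the secret key as the password. The host, the keys, and the helper name are placeholders or assumptions; the fields you then copy into Notion depend on your own traces and are not assumed here.

```python
import base64
import urllib.parse
import urllib.request


def build_traces_request(host, public_key, secret_key, page=1, limit=50):
    """Build (but do not send) a GET request listing traces from the Langfuse public API."""
    query = urllib.parse.urlencode({"page": page, "limit": limit})
    url = f"{host.rstrip('/')}/api/public/traces?{query}"
    # Basic auth: base64("public_key:secret_key")
    token = base64.b64encode(f"{public_key}:{secret_key}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Basic {token}"},
        method="GET",
    )


# Placeholder credentials and host; use the values from your own Langfuse project.
traces_req = build_traces_request(
    "https://cloud.langfuse.com", "pk-lf-placeholder", "sk-lf-placeholder"
)
# To actually send it:
#   import json
#   with urllib.request.urlopen(traces_req) as resp:
#       traces = json.load(resp)
print(traces_req.full_url)
```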

Deploy the chatflow on a website

Finally, I embedded the chatflow on my website using the dedicated Embed code found in the Flowise chatbot's "API endpoint" section. You can toggle on "Show Embed Chat Config" to customize the design and functionality of the embedded chatbot. For even more extensive customization, you can fork the repository and create your own version.

You can find the embed code in the dedicated menu at the top right corner of the Flowise chatflow.